I need to make the following necessary disclaimer: I have worked successfully in talent acquisition for 25 years. I am not an artist. Nothing I do is an art or magic. Everything I do is a science.

I make this disclaimer because I believe we as TA professionals know there is truth in it, and that this truth is a key to advancing our profession. However, if you are unable to articulate and quantify what you do, why you do it, and how, please don’t confuse the science of what you do with art or magic.

There is nothing wrong with having a hunch or feeling about something related to TA, whether it is a tool or a technique. That’s a natural part of intellectual curiosity. It is also possible that through breadth, depth, and years of experience, the accuracy of those hunches and feelings will increase.

My concern arises with 1) How we as TA leaders position and communicate our hunches/theories/feelings/ideas, and 2) Our repeated and inexcusable lack of effort and/or ability to measure the outcomes and veracity of our statements in regard to said tools and/or techniques.

In our industry, the most visibly guilty are the “pop culture thought leaders” and vendors, but no less guilty is the working stiff TA leader, and in the end they may have the greatest impact. So what to do?

Let’s start with the Pop Culture Thought Leader, not to be confused with actual thought leaders. You know the type. They see a 5-minute TED Talk on YouTube and become evangelists of the “word,” or through tremendous leaps of logic, declare a new product or vendor the “Uber/Zappos/Facebook/Match.com” of recruiting. They also declare silly things like “the one question that will change your recruiting game,” or “Why [insert grit/chutzpah/love of the color puce here] is the most important measure of a candidate.”

My concern arises from the fact that these hunches/theories/feelings/ideas are not presented as such. Instead, these people use their position to espouse them as more than just theories, often without doing the due diligence to discover whether the theory has previously been validated.

I would have no issue if folks in this position said “I have a theory/idea/guess,” and some do, but most don’t. Unsuspecting readers wrongly assume that these are “experts” who have vetted their ideas, or at the very least are measuring their new ideas and will provide more detailed follow-up guidance later. Nope. That almost never happens, and that sucks. I believe that the continued proliferation of “non-expert” experts has done more to slow the positive evolution of TA than anything else. They offer confusion but not accountability.

Now vendors, who often have a tool to prove, make pseudo-scientific claims to validate their work, but more often than not those claims are based on insufficient testing, or huge (dare I say YUGE) assumptions on their part. As an example: “I have a tool that can increase your quality of hire,” but when asked to define quality of hire they can’t articulate a true quality metric. Or: “Video interviewing can reduce your time to fill.” How do you know that if you don’t know 1) my current time to fill, or 2) whether it is even an issue?

I can appreciate making the stab in the dark, I get it, we’ve all got to eat. But a white paper based on a single case study with one customer does not prove a darn thing, particularly when at most you can only show correlation, and you know it.

TA leaders (of which I am one) are swamped. We don’t always have the luxury or time to measure and analyze data, particularly if we are looking to isolate causality. It takes serious sweat equity. Even if we do develop evidence past anecdotal levels, we don’t often share our research and allow others to see if it is replicable. I’ve been in TA for 25 years and have had only one study appear in a peer-reviewed journal, and getting that done took a serious team effort, so I get the difficulty.

So what I’m asking is threefold. First, the easy one: be clear if something is simply an idea/theory/hunch. You can share your bona fides, but those bona fides are no indication that your idea/theory/hunch will be any more effective than what someone is doing now. If you want to be an all-star, ask folks to report their results (measurably, not anecdotally), analyze that data, and share it at a later date: be a true expert.

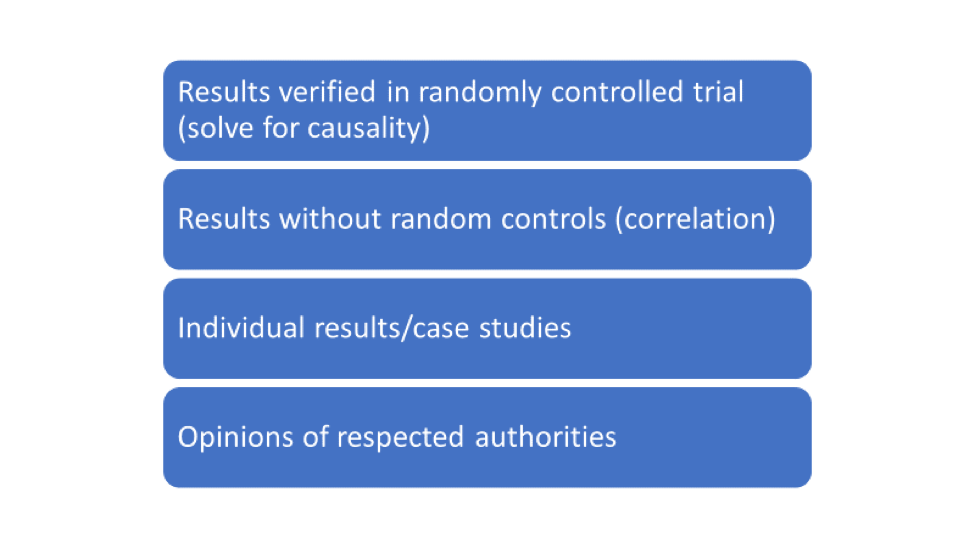

Second, let’s implement an evidence-based process for TA decision making. Evidence-based practice has been used for some time in healthcare, and I have a theory/idea/hunch that it could be easily implemented in the TA space. In a nutshell, the more documented, measurable, replicated proof you have for a theory, the higher the value placed on it. Simple. It could look something like what you see in the graphic.

This is, of course, oversimplified in order to spur discussion.

Finally, share, share, share. We have more access to each other as TA pros than ever before. Use that to share ideas, and ask others to try the same thing and validate your results. Exercise the discipline of a true professional: measure the data and analyze the results. If you want to play in the big leagues, do the work!

To close, I would love to hear other thoughts on the concept of evidence-based recruiting, and how you would operationalize it. Do not give me your excuses for why you can’t do it; excuses are like ears, everyone has more than one. It’s time for TA to put on our big league britches, so let’s work together to do that!