In every vendor demo and promise‑filled conference room, AI remains the miracle cure for everything that ails your hiring process. You know the “proof points” – cut time‑to‑hire in half, double the candidate quality, scale infinitely.

And for a while, these promises of better hiring through better technology sounded great. Automation, after all, meant fewer scheduling emails and less time spent updating manual spreadsheets or candidate profiles. But what we actually automated wasn’t recruiting. It was empathy.

What we seem to have forgotten is that speed and quality are often inversely correlated, and that experience is all about the means, rather than the ends – which, of course, is the complete opposite of AI’s foundational value proposition.

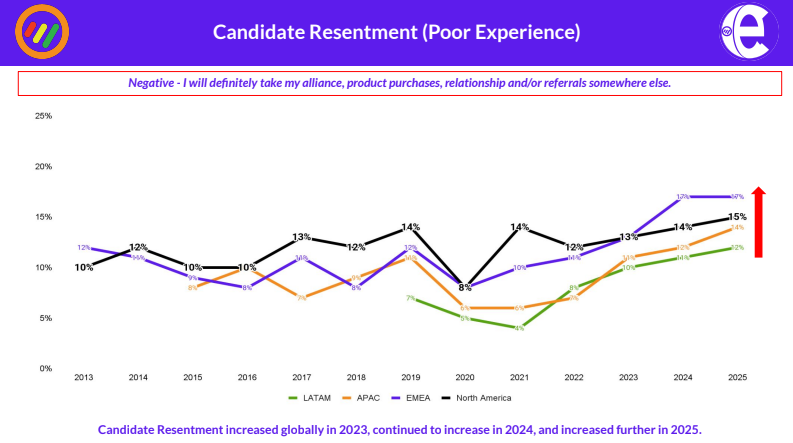

Studies suggest that while talent acquisition professionals largely believe AI has improved their overall TA efforts, they are not convinced that it’s making the candidate experience better – and a whole lot of respondents seem to think it has actually made it worse.

This shift from experience to efficiency is a Pyrrhic victory for recruiters and TA leaders at best. You can build a pipeline full of the top talent in the market, but everyone essentially has access to the same candidate databases or talent pools.

LinkedIn Recruiter has a 92% market share among enterprise employers, and is often the largest line item in enterprise TA budgets. When everyone has access to the same information, insights and data (as is the case with many LLMs), that data has limited value and creates no inherent competitive advantage – unlike, say, traditional direct sourcing and candidate qualification.

Instead, what separates hiring success from failure has nothing to do with sourcing pipeline, and everything to do with how companies engage with, and activate, that pipeline.

If none of your candidates feel seen, heard, respected or appreciated, AI isn’t going to help you hire faster. It’s just going to enable you to lose trust and employer brand equity faster – and promises to puncture a pretty significant hole in even the strongest of pipelines. If you think it was hard getting passive candidates to engage with real recruiters, watch what happens when you rely exclusively on automated “artificial intelligence.”

“We Fixed Recruiting,” Said Every Vendor Just Before Breaking It

There’s a myth that technology solves human problems. In recruiting, it makes problems scalable – and often so entrenched in the process that what started off as fixable bugs end up as permanent features (see: ATS and data portability).

Look at the rise of “conversational intelligence agents,” which is a fancy VC way of saying “chatbot.” These early “AI recruiting” solutions promised immediate candidate communication, faster feedback loops, automated prescreening and higher conversion rates. Instead, they quickly devolved into the HR Tech equivalent of automated phone systems.

“Your call is very important to us. Please dial the extension of the party you wish to reach. If you do not know your party’s extension, please hang up now.”

And for all of its potential for eliminating bias from talent decisions, AI just seems to filter out candidates faster, without explaining why, and ensures that unconscious bias remains an inextricable part of the TA process.

Only instead of lived experiences or personal perspectives, our bias now inherently reflects our belief that automation is superior to personalization, and that algorithms should be trusted above instinct. According to another recent peer-reviewed study in Computer Law and Security Review, AI‑driven recruiting systems raise significant fairness challenges despite claims of objectivity.

Candidate experience used to mean something. Even when that meaning was a bit hackneyed and asinine. Sure, it was probably a bit quixotic, to put it mildly. But still, the trend towards focusing on candidate experience was a good faith effort by employers to do right by their candidates by doing good (or at least, the bare minimum).

Sure, not everyone heard back about every job they applied for. But when you did get a bite, it wasn’t a prompt asking you to self-schedule an automated assessment before a human even had the chance to review your resume.

It was a real call, from a real recruiter – and even when the news wasn’t good, chances are the recruiter on the other end of the line provided some sort of encouragement, maybe even some constructive feedback or advice on applying in the future.

This was what candidate experience looked like in practice. Applicants and candidates were treated as people, not profiles.

The recruiting process was treated like a conversation, not a transaction. And while the industry still had a ton of work to do (understatement of the year) when it came to fixing the candidate experience, at least the work was being done. Now, with AI, that work has largely been undone.